Double Decoder VAE

David Frühbuß

6/25/2025

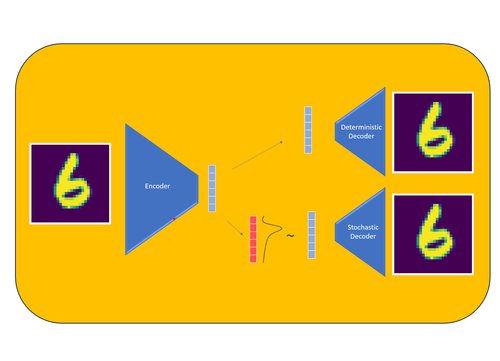

Discrete latent spaces in Variational Autoencoders (VAEs) can yield more interpretable representations than continuous ones, but they are difficult to train because sampling discrete values is not differentiable. We propose the Double Decoder VAE (DD-VAE), which adds a second, deterministic decoder that approximates the behavior of the stochastic decoder. This enables effective training without continuous relaxations such as Gumbel-Softmax. Our method uses Dirichlet sampling to explore the latent space while aiming to preserve the discrete nature of the representation. Initial results on binarized MNIST show promising interpretability, although reconstruction quality still falls short of Gumbel-Softmax.
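To make the distinction between the sampling schemes concrete, here is a minimal stdlib-only sketch of three ways to turn encoder probabilities over K categories into a latent vector: a hard one-hot categorical sample (discrete but non-differentiable), a Gumbel-Softmax sample (the continuous relaxation used as the baseline), and a Dirichlet sample (a random point on the probability simplex). It is illustrative only and is not the DD-VAE implementation. The post does not say how the Dirichlet concentration is parameterized, so setting it to `scale * probs` with a hypothetical `scale` factor is an assumption, as are all function names.

```python
import math
import random

def one_hot_sample(probs, rng):
    """Hard categorical sample: a discrete one-hot vector.
    The chosen index depends on a random draw, so the output is piecewise
    constant in `probs` and provides no useful gradient."""
    k = rng.choices(range(len(probs)), weights=probs)[0]
    return [1.0 if i == k else 0.0 for i in range(len(probs))]

def gumbel_softmax_sample(probs, tau, rng):
    """Gumbel-Softmax relaxation: a continuous point on the simplex that
    approaches a one-hot vector as the temperature `tau` goes to 0.
    Assumes every entry of `probs` is strictly positive."""
    scores = []
    for p in probs:
        u = rng.random()
        while u == 0.0:  # keep u in (0, 1) so both logs are finite
            u = rng.random()
        g = -math.log(-math.log(u))  # Gumbel(0, 1) noise
        scores.append((math.log(p) + g) / tau)
    m = max(scores)  # subtract the max for a numerically stable softmax
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dirichlet_sample(alpha, rng):
    """Dirichlet sample via normalized Gamma(alpha_i, 1) draws.
    Assumes every concentration alpha_i is positive."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]

if __name__ == "__main__":
    rng = random.Random(0)
    probs = [0.7, 0.2, 0.1]  # hypothetical encoder output for K = 3
    scale = 10.0             # hypothetical Dirichlet concentration scale
    print("one-hot:       ", one_hot_sample(probs, rng))
    print("gumbel-softmax:", gumbel_softmax_sample(probs, tau=0.5, rng=rng))
    print("dirichlet:     ", dirichlet_sample([scale * p for p in probs], rng))
```

Only the one-hot sample is truly discrete. The Gumbel-Softmax and Dirichlet samples are both points on the simplex. In this sketch, the temperature `tau` controls how sharp the Gumbel-Softmax samples are, and `scale` controls how tightly the Dirichlet samples cluster around `probs`.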