Blackboxes Meet Blackboxes Across the World: A Multilingual Analysis of LLMs’ and Human Linguistic Information Processing

David Frühbuß

5/8/2024

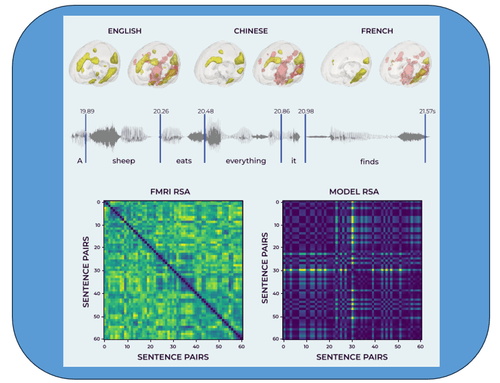

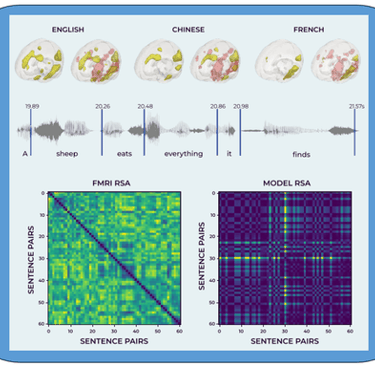

As large models reach human-like capabilities, especially in the domain of natural language, it becomes increasingly interesting to understand whether these models process information the same way humans do. In the past, brain representations have been compared to the representations of large language models. In this work, we extend this line of research by comparing brain and model representations across three languages. For the brain representations, we use fMRI scans from the Le Petit Prince dataset (Li et al., 2021). We obtain language model representations from a multilingual XLM-R model and three monolingual models. We use Representational Similarity Analysis (RSA) to compare the representations across models, subjects, and languages. We find that the multilingual models have a distinct two-step structure in their representations and are more similar to the brain representations than the representations of the monolingual models are.
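RSA sidesteps the fact that fMRI voxels and model hidden units live in different spaces: each system is summarized by a representational dissimilarity matrix (RDM) over the same stimuli, and the RDMs are then compared. The sketch below is illustrative only and assumes a common RSA setup (1 minus Pearson correlation as the dissimilarity, Spearman correlation between the RDMs' upper triangles); the post does not state which metrics were used, and the function names `rdm` and `rsa_score` are hypothetical.

```python
import numpy as np


def rdm(features: np.ndarray) -> np.ndarray:
    """RDM over stimuli: rows are stimuli, columns are voxels or hidden units.

    Dissimilarity is 1 - Pearson correlation between stimulus rows
    (an assumed choice; other distances are also common in RSA).
    """
    return 1.0 - np.corrcoef(features)


def _ranks(x: np.ndarray) -> np.ndarray:
    # Ordinal ranks; assumes no exact ties, which is typical for continuous data.
    ranks = np.empty(len(x), dtype=float)
    ranks[np.argsort(x)] = np.arange(len(x))
    return ranks


def rsa_score(feat_a: np.ndarray, feat_b: np.ndarray) -> float:
    """Spearman correlation between the upper triangles of two RDMs.

    feat_a and feat_b must describe the same stimuli in the same order,
    but may have different feature dimensions (e.g. voxels vs. hidden size).
    """
    ra, rb = rdm(feat_a), rdm(feat_b)
    if ra.shape != rb.shape:
        raise ValueError("both inputs must cover the same number of stimuli")
    iu = np.triu_indices_from(ra, k=1)  # off-diagonal entries, each pair once
    va, vb = _ranks(ra[iu]), _ranks(rb[iu])
    # Pearson correlation of ranks equals Spearman correlation (no ties).
    return float(np.corrcoef(va, vb)[0, 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    brain = rng.normal(size=(20, 500))          # hypothetical: 20 stimuli x 500 voxels
    model = brain @ rng.normal(size=(500, 768))  # hypothetical model states, 768 dims
    unrelated = rng.normal(size=(20, 768))
    print(rsa_score(brain, model), rsa_score(brain, unrelated))
```

Because only the RDMs are compared, the same function can score brain vs. model, model vs. model, or subject vs. subject, which matches the kinds of comparisons the abstract describes.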